A step-by-step guide to integrating Airbyte into your product, from 0 to 1.

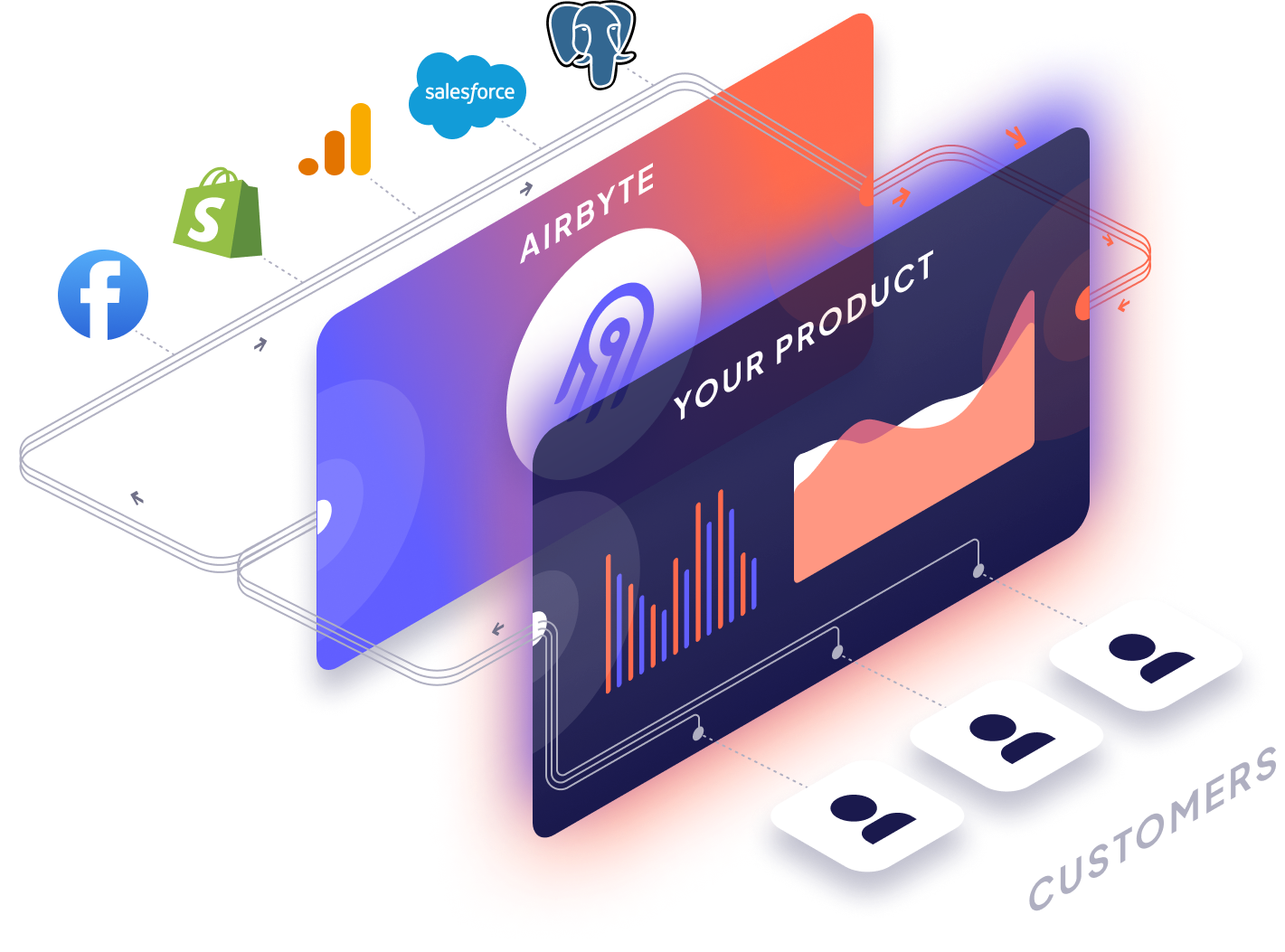

What is Airbyte Embedded?

Airbyte Embedded enables you to add hundreds of integrations to your product instantly. Your end users can authenticate into their data sources and begin syncing data to your product, so you no longer need to spend engineering cycles on data movement. Focus on what makes your product great rather than on maintaining ELT pipelines.

Use Case Example

- An Airbyte Embedded Operator manages an enterprise search platform with thousands of businesses as customers.

- Each of the Operator's customers may have data spread across 5-10 different data sources (ex: Google Drive, Zendesk) that need to be synced to a single destination (ex: S3) before being brought to the retrieval platform.

- Through Airbyte Embedded, the Operator can let its customers' end users connect their data sources and sync that data to a central Airbyte destination, such as a database, data warehouse, or data lake.

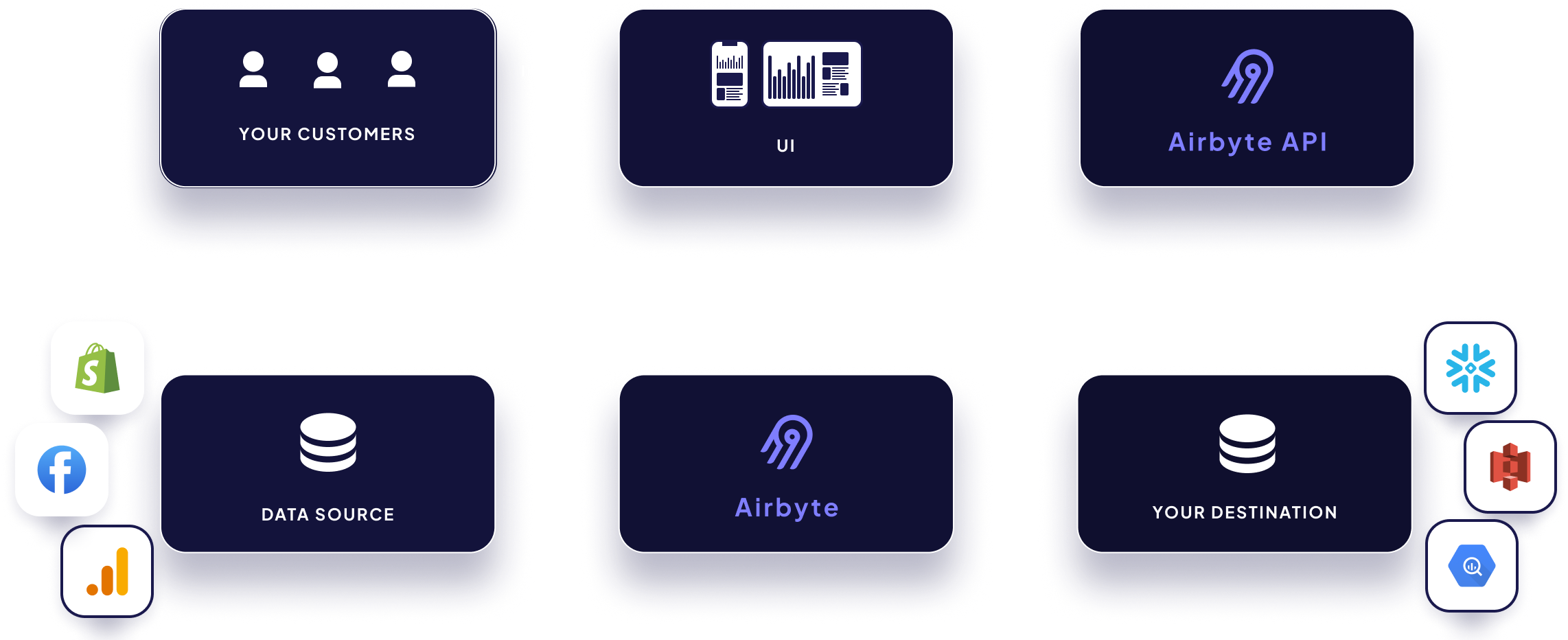

- Behind the scenes, your product executes a series of requests to the Airbyte API.

- The Airbyte API can also be used to update source credentials, trigger data syncs, and perform other operations available in Airbyte.

- See the full Airbyte connector catalog

- Or build your own Custom Connector to use with Airbyte Embedded.

- Note: You can even "bring your own Secrets Manager" or use Airbyte’s. The choice is yours.

The 2 Versions of Airbyte Embedded

| Headless Version (available now) | UI Version (coming mid 2025) |
|---|---|
| Power your product's frontend UI with the Airbyte API behind the scenes. Enables you to fully customize the user experience. | A "done-for-you" UI that enables customers to authenticate and sync data. |

Step-by-Step Implementation (Headless Version)

0. Airbyte Terminology

- Source: An API, file, database, or data warehouse that you want to ingest data from.

- Destination: A data warehouse, data lake, or database where you want to load your ingested data.

- Connection: A connection is an automated data pipeline that replicates data from a source to a destination.

- Workspace: A grouping of sources, destinations, and connections. To keep things organized and easier to manage, each business that uses your product should have its sources, destinations, and connections in its own workspace.

- Details on additional core concepts such as Streams, Namespaces, Sync Modes, and Normalization can be found in Core Concepts and the Airbyte Glossary.
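The relationship between these objects can be sketched as a tiny in-memory model. This is a hypothetical illustration, not an Airbyte SDK type: `Workspace`, `connect`, and the string IDs are names invented here, and the rule that a connection may only link a source and a destination from the same workspace is an assumption consistent with the per-customer organization recommended above.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class Workspace:
    """Hypothetical local model: one workspace per customer business."""

    name: str
    sources: Set[str] = field(default_factory=set)
    destinations: Set[str] = field(default_factory=set)
    # Each connection is a (source, destination) pipeline.
    connections: List[Tuple[str, str]] = field(default_factory=list)

    def connect(self, source: str, destination: str) -> Tuple[str, str]:
        """Create a connection, but only between objects in this workspace."""
        if source not in self.sources:
            raise ValueError(f"unknown source {source!r} in {self.name}")
        if destination not in self.destinations:
            raise ValueError(f"unknown destination {destination!r} in {self.name}")
        pipeline = (source, destination)
        self.connections.append(pipeline)
        return pipeline


ws = Workspace("Workspace for Customer X")
ws.sources.update({"stripe", "zendesk"})
ws.destinations.add("bigquery")
ws.connect("stripe", "bigquery")
ws.connect("zendesk", "bigquery")
print(len(ws.connections))  # 2
```

Several sources feeding one destination inside a single workspace matches the use case above, where each customer's 5-10 sources sync to one central destination.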

1. Create your API key

- To get started with Airbyte Embedded, you must reach out to Sales to enable multiple workspaces for your organization.

- Follow the authentication instructions.
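Every request in this guide sends the key as a bearer token (`authorization: Bearer 12345` in the examples). Rather than hard-coding it, a product would typically load it from configuration. A minimal sketch, assuming the token lives in an environment variable named `AIRBYTE_API_TOKEN` (a name chosen here for illustration). If the authentication instructions have you exchange application credentials for a short-lived access token, pass that token in instead; this sketch does not handle refreshing it.

```python
import os
from typing import Dict, Optional


def airbyte_headers(token: Optional[str] = None) -> Dict[str, str]:
    """Build the JSON + bearer-token headers sent with every request in this guide.

    If no token is passed, fall back to the AIRBYTE_API_TOKEN environment
    variable (a hypothetical name chosen for this sketch).
    """
    token = token or os.environ.get("AIRBYTE_API_TOKEN", "")
    if not token:
        raise ValueError("no Airbyte API token provided")
    return {
        "accept": "application/json",
        "content-type": "application/json",
        "authorization": f"Bearer {token}",
    }


print(airbyte_headers("12345")["authorization"])  # Bearer 12345
```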

2. Create a Workspace

- Navigate to the API doc: Create Workspace

- Enter a name for the workspace, and the docs page generates a fully-formed API request on the right-hand side. You can copy this request into your product's code for execution (or even execute it directly from the docs).

- The API response contains the `workspaceId` of your newly created workspace.

- You can also use any workspaces created through the Airbyte Cloud UI, in addition to those you create through the Airbyte API.

- Note: To keep things organized and easier to manage, each business that uses your product should have its own Airbyte workspace.
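In product code, this step usually runs when a new customer business signs up. The sketch below uses only Python's standard library (`urllib.request`) and separates building the request from sending it, so the request can be inspected without network access. The function names and the `Workspace for <customer>` naming scheme are illustrative assumptions; the endpoint, body, and `workspaceId` response field come from the example in this step.

```python
import json
import urllib.request

API_BASE = "https://api.airbyte.com/v1"


def build_create_workspace(token: str, customer: str) -> urllib.request.Request:
    """Build (but do not send) a POST /workspaces request for one customer."""
    body = json.dumps({"name": f"Workspace for {customer}"}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/workspaces",
        data=body,
        method="POST",
        headers={
            "accept": "application/json",
            "content-type": "application/json",
            "authorization": f"Bearer {token}",
        },
    )


def create_workspace(token: str, customer: str) -> str:
    """Send the request and return the new workspaceId."""
    with urllib.request.urlopen(build_create_workspace(token, customer)) as resp:
        return json.load(resp)["workspaceId"]


req = build_create_workspace("12345", "Customer X")
print(req.get_method(), req.full_url)  # POST https://api.airbyte.com/v1/workspaces
```

Store the returned `workspaceId` alongside your own customer record; every later step needs it.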

Python (requests):

```python
import requests

url = "https://api.airbyte.com/v1/workspaces"

payload = {"name": "Workspace for Customer X"}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "user-agent": "string",
    "authorization": "Bearer 12345",
}

response = requests.post(url, json=payload, headers=headers)
```

Python SDK:

```python
import airbyte
from airbyte.models import shared

s = airbyte.Airbyte(
    security=shared.Security(
        bearer_auth='12345',
    ),
)

req = shared.WorkspaceCreateRequest(
    name='Workspace for Customer X',
)

res = s.workspaces.create_workspace(req)

if res.workspace_response is not None:
    # handle response
    print(res.workspace_response)
```

Java (OkHttp):

```java
// Requires okhttp3 (MediaType, OkHttpClient, Request, RequestBody, Response);
// run inside a method that declares `throws IOException`.
OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("application/json");
RequestBody body = RequestBody.create(mediaType, "{\"name\":\"Workspace for Customer X\"}");

Request request = new Request.Builder()
    .url("https://api.airbyte.com/v1/workspaces")
    .post(body)
    .addHeader("accept", "application/json")
    .addHeader("content-type", "application/json")
    .addHeader("user-agent", "string")
    .addHeader("authorization", "Bearer 12345")
    .build();

Response response = client.newCall(request).execute();
```

Java SDK:

```java
import com.airbyte.api.Airbyte;
import com.airbyte.api.models.operations.CreateWorkspaceResponse;
import com.airbyte.api.models.shared.Security;
import com.airbyte.api.models.shared.WorkspaceCreateRequest;

public class Application {
    public static void main(String[] args) {
        try {
            Airbyte sdk = Airbyte.builder()
                .setSecurity(new Security("") {{
                    bearerAuth = "12345";
                }})
                .build();

            WorkspaceCreateRequest req = new WorkspaceCreateRequest("Workspace for Customer X");

            CreateWorkspaceResponse res = sdk.workspaces.createWorkspace(req);

            if (res.workspaceResponse != null) {
                // handle response
            }
        } catch (Exception e) {
            // handle exception
        }
    }
}
```

Terraform:

```hcl
terraform {
  required_providers {
    airbyte = {
      source  = "airbytehq/airbyte"
      version = "0.1.0"
    }
  }
}

provider "airbyte" {
  bearer_auth = "12345"
}

resource "airbyte_workspace" "customer_x" {
  name = "Workspace for Customer X"
}
```

Go:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.airbyte.com/v1/workspaces"
	payload := strings.NewReader(`{"name":"Workspace for Customer X"}`)

	req, err := http.NewRequest("POST", url, payload)
	if err != nil {
		log.Fatal(err)
	}
	req.Header.Add("accept", "application/json")
	req.Header.Add("content-type", "application/json")
	req.Header.Add("user-agent", "string")
	req.Header.Add("authorization", "Bearer 12345")

	res, err := http.DefaultClient.Do(req)
	if err != nil {
		log.Fatal(err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(res.Status)
	fmt.Println(string(body))
}
```

Node.js:

```javascript
const sdk = require('api')('@airbyte-api/v1#4vsz8clinemryl');

sdk.auth('12345');
sdk.createWorkspace({ name: 'Workspace for Customer X' })
  .then(({ data }) => console.log(data))
  .catch(err => console.error(err));
```

Ruby:

```ruby
require 'uri'
require 'net/http'
require 'openssl'

url = URI("https://api.airbyte.com/v1/workspaces")

http = Net::HTTP.new(url.host, url.port)
http.use_ssl = true

request = Net::HTTP::Post.new(url)
request["accept"] = 'application/json'
request["content-type"] = 'application/json'
request["user-agent"] = 'string'
request["authorization"] = 'Bearer 12345'
request.body = "{\"name\":\"Workspace for Customer X\"}"

response = http.request(request)
puts response.read_body
```

cURL:

```shell
curl --request POST \
  --url https://api.airbyte.com/v1/workspaces \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --header 'user-agent: string' \
  --header 'authorization: Bearer 12345' \
  --data '
{
  "name": "Workspace for Customer X"
}
'
```

Example response:

```json
{
  "workspaceId": "9924bcd0-99be-453d-ba47-c2c9766f7da5"
}
```

3. Create a Source

- Navigate to our API doc: Create Source

- Search for the Source type you are interested in.

- Enter the required credentials for the source, and the docs page generates a fully-formed API request that you can copy into your product's code for execution.

- The API response contains the `sourceId` of your newly created source.

- For Sources that require OAuth, see our guide How to Create OAuth Sources, which also covers the option to "Bring your own OAuth credentials".
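The Create Source body always has the same shape: a `configuration` object whose `sourceType` selects the connector, plus `name` and `workspaceId`. A small helper can therefore build it for any connector. This is a sketch: `build_source_body` is a hypothetical name, and the Stripe field names are copied from the example in this step; other connectors take different configuration keys, listed in the API reference.

```python
from typing import Any, Dict


def build_source_body(workspace_id: str, name: str, source_type: str, **config: Any) -> Dict[str, Any]:
    """Build a Create Source request body for any connector.

    `source_type` picks the connector; `config` holds its connector-specific
    fields (for Stripe: account_id, client_secret, start_date, ...).
    """
    if "sourceType" in config:
        raise ValueError("pass the connector via source_type, not in config")
    return {
        "configuration": {"sourceType": source_type, **config},
        "name": name,
        "workspaceId": workspace_id,
    }


body = build_source_body(
    "9924bcd0-99be-453d-ba47-c2c9766f7da5",
    "Stripe Account",
    "stripe",
    account_id="acct_123",
    client_secret="sklive_abc",  # in practice, collected from your end user
    lookback_window_days=0,
    slice_range=365,
    start_date="2023-07-01T00:00:00Z",
)
print(body["configuration"]["sourceType"])  # stripe
```

Keeping the connector-specific keys in `config` means the same helper serves a Zendesk or Google Drive source without changes.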

Python (requests):

```python
import requests

url = "https://api.airbyte.com/v1/sources"

payload = {
    "configuration": {
        "sourceType": "stripe",
        "account_id": "acct_123",
        "client_secret": "sklive_abc",
        "lookback_window_days": 0,
        "slice_range": 365,
        "start_date": "2023-07-01T00:00:00Z",
    },
    "name": "Stripe Account",
    "workspaceId": "9924bcd0-99be-453d-ba47-c2c9766f7da5",
}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "user-agent": "string",
    "authorization": "Bearer 12345",
}

response = requests.post(url, json=payload, headers=headers)
```

Python SDK:

```python
import airbyte
import dateutil.parser
from airbyte.models import shared

s = airbyte.Airbyte(
    security=shared.Security(
        bearer_auth='12345',
    ),
)

req = shared.SourceCreateRequest(
    configuration=shared.SourceStripe(
        source_type=shared.SourceStripeStripe.STRIPE,
        account_id='acct_123',
        client_secret='sklive_abc',
        lookback_window_days=0,
        slice_range=365,
        start_date=dateutil.parser.isoparse('2023-07-01T00:00:00Z'),
    ),
    name='Stripe Account',
    workspace_id='9924bcd0-99be-453d-ba47-c2c9766f7da5',
)

res = s.sources.create_source(req)

if res.source_response is not None:
    # handle response
    print(res.source_response)
```

Java (OkHttp):

```java
// Requires okhttp3 (MediaType, OkHttpClient, Request, RequestBody, Response);
// run inside a method that declares `throws IOException`.
OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("application/json");
RequestBody body = RequestBody.create(mediaType, "{\"configuration\":{\"lookback_window_days\":0,\"slice_range\":365,\"sourceType\":\"stripe\",\"account_id\":\"acct_123\",\"client_secret\":\"sklive_abc\",\"start_date\":\"2023-07-01T00:00:00Z\"},\"name\":\"Stripe Account\",\"workspaceId\":\"9924bcd0-99be-453d-ba47-c2c9766f7da5\"}");

Request request = new Request.Builder()
    .url("https://api.airbyte.com/v1/sources")
    .post(body)
    .addHeader("accept", "application/json")
    .addHeader("content-type", "application/json")
    .addHeader("user-agent", "string")
    .addHeader("authorization", "Bearer 12345")
    .build();

Response response = client.newCall(request).execute();
```

Java SDK:

```java
import com.airbyte.api.Airbyte;
import com.airbyte.api.models.operations.CreateSourceResponse;
import com.airbyte.api.models.shared.Security;
import com.airbyte.api.models.shared.SourceCreateRequest;
import com.airbyte.api.models.shared.SourceStripe;
import java.time.LocalDate;

public class Application {
    public static void main(String[] args) {
        try {
            Airbyte sdk = Airbyte.builder()
                .setSecurity(new Security("") {{
                    bearerAuth = "12345";
                }})
                .build();

            // Constructor arguments and camelCase field names are assumed from the
            // original snippet; verify them against the Java SDK reference.
            SourceStripe configuration = new SourceStripe("Stripe", SourceStripe.STRIPE, LocalDate.parse("2023-07-01")) {{
                accountId = "acct_123";
                clientSecret = "sklive_abc";
                lookbackWindowDays = 0;
                sliceRange = 365;
            }};

            // Arguments: configuration, name, workspace ID.
            SourceCreateRequest req = new SourceCreateRequest(
                configuration, "Stripe Account", "9924bcd0-99be-453d-ba47-c2c9766f7da5");

            CreateSourceResponse res = sdk.sources.createSource(req);

            if (res.sourceResponse != null) {
                // handle response
            }
        } catch (Exception e) {
            // handle exception
        }
    }
}
```

Terraform:

```hcl
terraform {
  required_providers {
    airbyte = {
      source  = "airbytehq/airbyte"
      version = "0.1.0"
    }
  }
}

provider "airbyte" {
  bearer_auth = "12345"
}

resource "airbyte_source_stripe" "stripe" {
  name         = "Stripe Account"
  workspace_id = "9924bcd0-99be-453d-ba47-c2c9766f7da5"
  configuration = {
    source_type          = "stripe"
    account_id           = "acct_123"
    client_secret        = "sklive_abc"
    start_date           = "2023-07-01T00:00:00Z"
    lookback_window_days = 0
    slice_range          = 365
  }
}
```

Go:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.airbyte.com/v1/sources"
	payload := strings.NewReader(`{"configuration":{"lookback_window_days":0,"slice_range":365,"sourceType":"stripe","account_id":"acct_123","client_secret":"sklive_abc","start_date":"2023-07-01T00:00:00Z"},"name":"Stripe Account","workspaceId":"9924bcd0-99be-453d-ba47-c2c9766f7da5"}`)

	req, err := http.NewRequest("POST", url, payload)
	if err != nil {
		log.Fatal(err)
	}
	req.Header.Add("accept", "application/json")
	req.Header.Add("content-type", "application/json")
	req.Header.Add("user-agent", "string")
	req.Header.Add("authorization", "Bearer 12345")

	res, err := http.DefaultClient.Do(req)
	if err != nil {
		log.Fatal(err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(res.Status)
	fmt.Println(string(body))
}
```

Node.js:

```javascript
const sdk = require('api')('@airbyte-api/v1#a933liamtcc0');

sdk.auth('12345');
sdk.createSource({
  configuration: {
    sourceType: 'stripe',
    account_id: 'acct_123',
    client_secret: 'sklive_abc',
    lookback_window_days: 0,
    slice_range: 365,
    start_date: '2023-07-01T00:00:00Z'
  },
  name: 'Stripe Account',
  workspaceId: '9924bcd0-99be-453d-ba47-c2c9766f7da5'
})
  .then(({ data }) => console.log(data))
  .catch(err => console.error(err));
```

Ruby:

```ruby
require 'uri'
require 'net/http'
require 'openssl'

url = URI("https://api.airbyte.com/v1/sources")

http = Net::HTTP.new(url.host, url.port)
http.use_ssl = true

request = Net::HTTP::Post.new(url)
request["accept"] = 'application/json'
request["content-type"] = 'application/json'
request["user-agent"] = 'string'
request["authorization"] = 'Bearer 12345'
request.body = "{\"configuration\":{\"lookback_window_days\":0,\"slice_range\":365,\"sourceType\":\"stripe\",\"account_id\":\"acct_123\",\"client_secret\":\"sklive_abc\",\"start_date\":\"2023-07-01T00:00:00Z\"},\"name\":\"Stripe Account\",\"workspaceId\":\"9924bcd0-99be-453d-ba47-c2c9766f7da5\"}"

response = http.request(request)
puts response.read_body
```

cURL:

```shell
curl --request POST \
  --url https://api.airbyte.com/v1/sources \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --header 'user-agent: string' \
  --header 'authorization: Bearer 12345' \
  --data '
{
  "configuration": {
    "sourceType": "stripe",
    "account_id": "acct_123",
    "client_secret": "sklive_abc",
    "lookback_window_days": 0,
    "slice_range": 365,
    "start_date": "2023-07-01T00:00:00Z"
  },
  "name": "Stripe Account",
  "workspaceId": "9924bcd0-99be-453d-ba47-c2c9766f7da5"
}
'
```

Example response:

```json
{
  "sourceId": "0c31738c-0b2d-4887-b506-e2cd1c39cc35"
}
```

4. Create a Destination

- Once you have successfully created your Source, it is time to create a Destination.

- Navigate to our API doc: Create Destination

- Search for the Destination type you are interested in.

- Enter the required credentials for the destination, and the docs page generates a fully-formed API request that you can copy into your product's code for execution.

- The API response contains the `destinationId` of your newly created destination.
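In the use case above, every source for a given customer syncs to one central destination, so a product typically creates the destination once per workspace and reuses its `destinationId`. A minimal sketch of that get-or-create pattern follows. The in-memory dict stands in for your own database, and `create_fn` is a hypothetical callable that would send the Create Destination request from this step and return the `destinationId`; none of this is an Airbyte API feature.

```python
from typing import Callable, Dict, List


class DestinationRegistry:
    """Remember one destinationId per workspace (hypothetical helper)."""

    def __init__(self, create_fn: Callable[[str], str]) -> None:
        # create_fn(workspace_id) -> destinationId; in a real product it would
        # call POST https://api.airbyte.com/v1/destinations.
        self._create_fn = create_fn
        self._by_workspace: Dict[str, str] = {}

    def get_or_create(self, workspace_id: str) -> str:
        """Create the destination on first use, then return the cached ID."""
        if workspace_id not in self._by_workspace:
            self._by_workspace[workspace_id] = self._create_fn(workspace_id)
        return self._by_workspace[workspace_id]


calls: List[str] = []


def fake_create(workspace_id: str) -> str:
    calls.append(workspace_id)
    return "af0c3c67-aa61-419f-8922-95b0bf840e86"


registry = DestinationRegistry(fake_create)
first = registry.get_or_create("9924bcd0-99be-453d-ba47-c2c9766f7da5")
second = registry.get_or_create("9924bcd0-99be-453d-ba47-c2c9766f7da5")
print(first == second, len(calls))  # True 1
```

A production version would persist the mapping and guard against two requests creating the same destination concurrently.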

Python (requests):

```python
import requests

url = "https://api.airbyte.com/v1/destinations"

payload = {
    "configuration": {
        "destinationType": "bigquery",
        "project_id": "123",
        "dataset_id": "456",
        "dataset_location": "US",
        "loading_method": {
            "method": "GCS Staging",
            "credential": {"credential_type": "HMAC_KEY"},
            "keep_files_in_gcs-bucket": "Delete all tmp files from GCS",
            "file_buffer_count": 10,
            "gcs_bucket_name": "airbyte_sync",
            "gcs_bucket_path": "data_sync/test",
        },
        "transformation_priority": "interactive",
        "big_query_client_buffer_size_mb": 15,
    },
    "name": "BigQuery",
    "workspaceId": "9924bcd0-99be-453d-ba47-c2c9766f7da5",
}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "user-agent": "string",
    "authorization": "Bearer 12345",
}

response = requests.post(url, json=payload, headers=headers)
```

Python SDK:

```python
import airbyte
from airbyte.models import shared

s = airbyte.Airbyte(
    security=shared.Security(
        bearer_auth='12345',
    ),
)

req = shared.DestinationCreateRequest(
    configuration=shared.DestinationBigquery(
        destination_type='bigquery',
        project_id='123',
        dataset_id='456',
        dataset_location='US',
        # Shown as a plain dict mirroring the JSON body; the SDK may expect its
        # typed loading-method models instead (check the Python SDK reference).
        loading_method={
            'method': 'GCS Staging',
            'credential': {'credential_type': 'HMAC_KEY'},
            'keep_files_in_gcs-bucket': 'Delete all tmp files from GCS',
            'file_buffer_count': 10,
            'gcs_bucket_name': 'airbyte_sync',
            'gcs_bucket_path': 'data_sync/test',
        },
        transformation_priority='interactive',
        big_query_client_buffer_size_mb=15,
    ),
    name='BigQuery',
    workspace_id='9924bcd0-99be-453d-ba47-c2c9766f7da5',
)

res = s.destinations.create_destination(req)

if res.destination_response is not None:
    # handle response
    print(res.destination_response)
```

Java (OkHttp):

```java
// Requires okhttp3 (MediaType, OkHttpClient, Request, RequestBody, Response);
// run inside a method that declares `throws IOException`.
OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("application/json");
RequestBody body = RequestBody.create(mediaType, "{\"configuration\":{\"dataset_location\":\"US\",\"loading_method\":{\"method\":\"GCS Staging\",\"credential\":{\"credential_type\":\"HMAC_KEY\"},\"keep_files_in_gcs-bucket\":\"Delete all tmp files from GCS\",\"file_buffer_count\":10,\"gcs_bucket_name\":\"airbyte_sync\",\"gcs_bucket_path\":\"data_sync/test\"},\"transformation_priority\":\"interactive\",\"big_query_client_buffer_size_mb\":15,\"destinationType\":\"bigquery\",\"project_id\":\"123\",\"dataset_id\":\"456\"},\"name\":\"BigQuery\",\"workspaceId\":\"9924bcd0-99be-453d-ba47-c2c9766f7da5\"}");

Request request = new Request.Builder()
    .url("https://api.airbyte.com/v1/destinations")
    .post(body)
    .addHeader("accept", "application/json")
    .addHeader("content-type", "application/json")
    .addHeader("user-agent", "string")
    .addHeader("authorization", "Bearer 12345")
    .build();

Response response = client.newCall(request).execute();
```

Java SDK:

```java
import com.airbyte.api.Airbyte;
import com.airbyte.api.models.operations.CreateDestinationResponse;
import com.airbyte.api.models.shared.DestinationBigquery;
import com.airbyte.api.models.shared.DestinationCreateRequest;
import com.airbyte.api.models.shared.Security;

public class Application {
    public static void main(String[] args) {
        try {
            Airbyte sdk = Airbyte.builder()
                .setSecurity(new Security("") {{
                    bearerAuth = "12345";
                }})
                .build();

            // Constructor arguments and camelCase field names are assumed from the
            // original snippet; verify them against the Java SDK reference.
            DestinationBigquery configuration = new DestinationBigquery("BigQuery", DestinationBigquery.BIGQUERY) {{
                projectId = "123";
                datasetId = "456";
                datasetLocation = "US";
                transformationPriority = "interactive";
                bigQueryClientBufferSizeMb = 15;
                // loadingMethod: GCS Staging with an HMAC_KEY credential, bucket
                // "airbyte_sync", path "data_sync/test", temporary files deleted,
                // file_buffer_count 10. Set it with the SDK's typed loading-method
                // classes (see the Java SDK reference for their names).
            }};

            // Arguments: configuration, name, workspace ID.
            DestinationCreateRequest req = new DestinationCreateRequest(
                configuration, "BigQuery", "9924bcd0-99be-453d-ba47-c2c9766f7da5");

            CreateDestinationResponse res = sdk.destinations.createDestination(req);

            if (res.destinationResponse != null) {
                // handle response
            }
        } catch (Exception e) {
            // handle exception
        }
    }
}
```

Terraform:

```hcl
terraform {
  required_providers {
    airbyte = {
      source  = "airbytehq/airbyte"
      version = "0.1.0"
    }
  }
}

provider "airbyte" {
  bearer_auth = "12345"
}

resource "airbyte_destination_bigquery" "bigquery" {
  name         = "BigQuery"
  workspace_id = "9924bcd0-99be-453d-ba47-c2c9766f7da5"
  configuration = {
    destination_type                = "bigquery"
    project_id                      = "123"
    dataset_id                      = "456"
    dataset_location                = "US"
    transformation_priority         = "interactive"
    big_query_client_buffer_size_mb = 15
    # Nested names mirror the JSON body; check the provider docs for the exact
    # loading_method schema in your provider version.
    loading_method = {
      method = "GCS Staging"
      credential = {
        credential_type = "HMAC_KEY"
      }
      keep_files_in_gcs-bucket = "Delete all tmp files from GCS"
      file_buffer_count        = 10
      gcs_bucket_name          = "airbyte_sync"
      gcs_bucket_path          = "data_sync/test"
    }
  }
}
```

Go:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.airbyte.com/v1/destinations"
	payload := strings.NewReader(`{"configuration":{"dataset_location":"US","loading_method":{"method":"GCS Staging","credential":{"credential_type":"HMAC_KEY"},"keep_files_in_gcs-bucket":"Delete all tmp files from GCS","file_buffer_count":10,"gcs_bucket_name":"airbyte_sync","gcs_bucket_path":"data_sync/test"},"transformation_priority":"interactive","big_query_client_buffer_size_mb":15,"destinationType":"bigquery","project_id":"123","dataset_id":"456"},"name":"BigQuery","workspaceId":"9924bcd0-99be-453d-ba47-c2c9766f7da5"}`)

	req, err := http.NewRequest("POST", url, payload)
	if err != nil {
		log.Fatal(err)
	}
	req.Header.Add("accept", "application/json")
	req.Header.Add("content-type", "application/json")
	req.Header.Add("user-agent", "string")
	req.Header.Add("authorization", "Bearer 12345")

	res, err := http.DefaultClient.Do(req)
	if err != nil {
		log.Fatal(err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(res.Status)
	fmt.Println(string(body))
}
```

Ruby:

```ruby
require 'uri'
require 'net/http'
require 'openssl'

url = URI("https://api.airbyte.com/v1/destinations")

http = Net::HTTP.new(url.host, url.port)
http.use_ssl = true

request = Net::HTTP::Post.new(url)
request["accept"] = 'application/json'
request["content-type"] = 'application/json'
request["user-agent"] = 'string'
request["authorization"] = 'Bearer 12345'
request.body = "{\"configuration\":{\"dataset_location\":\"US\",\"loading_method\":{\"method\":\"GCS Staging\",\"credential\":{\"credential_type\":\"HMAC_KEY\"},\"keep_files_in_gcs-bucket\":\"Delete all tmp files from GCS\",\"file_buffer_count\":10,\"gcs_bucket_name\":\"airbyte_sync\",\"gcs_bucket_path\":\"data_sync/test\"},\"transformation_priority\":\"interactive\",\"big_query_client_buffer_size_mb\":15,\"destinationType\":\"bigquery\",\"project_id\":\"123\",\"dataset_id\":\"456\"},\"name\":\"BigQuery\",\"workspaceId\":\"9924bcd0-99be-453d-ba47-c2c9766f7da5\"}"

response = http.request(request)
puts response.read_body
```

Node.js:

```javascript
const sdk = require('api')('@airbyte-api/v1#vdqyaliamr9js');

sdk.auth('12345');
sdk.createDestination({
  configuration: {
    destinationType: 'bigquery',
    project_id: '123',
    dataset_id: '456',
    dataset_location: 'US',
    loading_method: {
      method: 'GCS Staging',
      credential: { credential_type: 'HMAC_KEY' },
      'keep_files_in_gcs-bucket': 'Delete all tmp files from GCS',
      file_buffer_count: 10,
      gcs_bucket_name: 'airbyte_sync',
      gcs_bucket_path: 'data_sync/test'
    },
    transformation_priority: 'interactive',
    big_query_client_buffer_size_mb: 15
  },
  name: 'BigQuery',
  workspaceId: '9924bcd0-99be-453d-ba47-c2c9766f7da5'
})
  .then(({ data }) => console.log(data))
  .catch(err => console.error(err));
```

cURL:

```shell
curl --request POST \
  --url https://api.airbyte.com/v1/destinations \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --header 'user-agent: string' \
  --header 'authorization: Bearer 12345' \
  --data '
{
  "configuration": {
    "destinationType": "bigquery",
    "project_id": "123",
    "dataset_id": "456",
    "dataset_location": "US",
    "loading_method": {
      "method": "GCS Staging",
      "credential": {
        "credential_type": "HMAC_KEY"
      },
      "keep_files_in_gcs-bucket": "Delete all tmp files from GCS",
      "file_buffer_count": 10,
      "gcs_bucket_name": "airbyte_sync",
      "gcs_bucket_path": "data_sync/test"
    },
    "transformation_priority": "interactive",
    "big_query_client_buffer_size_mb": 15
  },
  "name": "BigQuery",
  "workspaceId": "9924bcd0-99be-453d-ba47-c2c9766f7da5"
}
'
```

Example response:

```json
{
  "destinationId": "af0c3c67-aa61-419f-8922-95b0bf840e86"
}
```

5. Create a Connection

- Once you have successfully created your Source & Destination, it is time to connect them.

- Navigate to our API reference: Create Connection

- Enter the required fields (`sourceId` and `destinationId`), and the docs page generates a fully-formed API request that you can copy into your product's code for execution.

- The Connection is created with default configuration settings, which you can customize. These settings control aspects of the Connection's sync, such as how often data syncs and where data is written within your Destination.
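Because the Connection starts with defaults, it can help to build the body from a fixed base and override only what a given customer needs. The sketch reuses the field names and values from the example in this step. The `cron` schedule type, the `cronExpression` field, and the Quartz-style expression in the second usage line are assumptions about the Create Connection schema and should be checked against the API reference; `build_connection_body` is a hypothetical helper.

```python
from typing import Any, Dict, Optional


def build_connection_body(
    source_id: str,
    destination_id: str,
    name: str,
    schedule: Optional[Dict[str, str]] = None,
    **overrides: Any,
) -> Dict[str, Any]:
    """Create Connection body: the defaults used in this guide, plus overrides."""
    body: Dict[str, Any] = {
        "name": name,
        "sourceId": source_id,
        "destinationId": destination_id,
        # Manual schedule: syncs run only when triggered (step 6).
        "schedule": schedule or {"scheduleType": "manual"},
        "dataResidency": "auto",
        "namespaceDefinition": "destination",
        "namespaceFormat": "${SOURCE_NAMESPACE}",
        "nonBreakingSchemaUpdatesBehavior": "ignore",
    }
    body.update(overrides)
    return body


manual = build_connection_body(
    "0c31738c-0b2d-4887-b506-e2cd1c39cc35",
    "af0c3c67-aa61-419f-8922-95b0bf840e86",
    "Stripe to BigQuery",
)
# Assumed schema for a recurring sync; verify in the Create Connection reference.
nightly = build_connection_body(
    "0c31738c-0b2d-4887-b506-e2cd1c39cc35",
    "af0c3c67-aa61-419f-8922-95b0bf840e86",
    "Stripe to BigQuery (nightly)",
    schedule={"scheduleType": "cron", "cronExpression": "0 0 2 * * ?"},
)
print(manual["schedule"], nightly["schedule"]["scheduleType"])
```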

Python (requests):

```python
import requests

url = "https://api.airbyte.com/v1/connections"

payload = {
    "name": "Stripe to BigQuery",
    "sourceId": "0c31738c-0b2d-4887-b506-e2cd1c39cc35",
    "destinationId": "af0c3c67-aa61-419f-8922-95b0bf840e86",
    "schedule": {"scheduleType": "manual"},
    "dataResidency": "auto",
    "namespaceDefinition": "destination",
    "namespaceFormat": "${SOURCE_NAMESPACE}",
    "nonBreakingSchemaUpdatesBehavior": "ignore",
}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "user-agent": "string",
    "authorization": "Bearer 12345",
}

response = requests.post(url, json=payload, headers=headers)

print(response.text)
```

Python SDK:

```python
import airbyte
from airbyte.models import shared

s = airbyte.Airbyte(
    security=shared.Security(
        bearer_auth='12345',
    ),
)

req = shared.ConnectionCreateRequest(
    name='Stripe to BigQuery',
    source_id='0c31738c-0b2d-4887-b506-e2cd1c39cc35',
    destination_id='af0c3c67-aa61-419f-8922-95b0bf840e86',
    # Schedule model names mirror the Java SDK example; check the Python SDK reference.
    schedule=shared.ConnectionSchedule(
        schedule_type=shared.ScheduleTypeEnum.MANUAL,
    ),
    data_residency=shared.GeographyEnum.AUTO,
    namespace_definition=shared.NamespaceDefinitionEnum.DESTINATION,
    namespace_format='${SOURCE_NAMESPACE}',
    non_breaking_schema_updates_behavior=shared.NonBreakingSchemaUpdatesBehaviorEnum.IGNORE,
)

res = s.connections.create_connection(req)

if res.connection_response is not None:
    # handle response
    print(res.connection_response)
```

Java (OkHttp):

```java
// Requires okhttp3 (MediaType, OkHttpClient, Request, RequestBody, Response);
// run inside a method that declares `throws IOException`.
OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("application/json");
RequestBody body = RequestBody.create(mediaType, "{\"schedule\":{\"scheduleType\":\"manual\"},\"dataResidency\":\"auto\",\"namespaceDefinition\":\"destination\",\"namespaceFormat\":\"${SOURCE_NAMESPACE}\",\"nonBreakingSchemaUpdatesBehavior\":\"ignore\",\"name\":\"Stripe to BigQuery\",\"sourceId\":\"0c31738c-0b2d-4887-b506-e2cd1c39cc35\",\"destinationId\":\"af0c3c67-aa61-419f-8922-95b0bf840e86\"}");

Request request = new Request.Builder()
    .url("https://api.airbyte.com/v1/connections")
    .post(body)
    .addHeader("accept", "application/json")
    .addHeader("content-type", "application/json")
    .addHeader("user-agent", "string")
    .addHeader("authorization", "Bearer 12345")
    .build();

Response response = client.newCall(request).execute();
```

Java SDK:

```java
import com.airbyte.api.Airbyte;
import com.airbyte.api.models.operations.CreateConnectionResponse;
import com.airbyte.api.models.shared.ConnectionCreateRequest;
import com.airbyte.api.models.shared.ConnectionSchedule;
import com.airbyte.api.models.shared.GeographyEnum;
import com.airbyte.api.models.shared.NamespaceDefinitionEnum;
import com.airbyte.api.models.shared.NonBreakingSchemaUpdatesBehaviorEnum;
import com.airbyte.api.models.shared.ScheduleTypeEnum;
import com.airbyte.api.models.shared.Security;

public class Application {
    public static void main(String[] args) {
        try {
            Airbyte sdk = Airbyte.builder()
                .setSecurity(new Security("") {{
                    bearerAuth = "12345";
                }})
                .build();

            // Arguments: sourceId, destinationId.
            ConnectionCreateRequest req = new ConnectionCreateRequest("0c31738c-0b2d-4887-b506-e2cd1c39cc35", "af0c3c67-aa61-419f-8922-95b0bf840e86") {{
                name = "Stripe to BigQuery";
                dataResidency = GeographyEnum.AUTO;
                namespaceDefinition = NamespaceDefinitionEnum.DESTINATION;
                namespaceFormat = "${SOURCE_NAMESPACE}";
                nonBreakingSchemaUpdatesBehavior = NonBreakingSchemaUpdatesBehaviorEnum.IGNORE;
                schedule = new ConnectionSchedule(ScheduleTypeEnum.MANUAL);
            }};

            CreateConnectionResponse res = sdk.connections.createConnection(req);

            if (res.connectionResponse != null) {
                // handle response
            }
        } catch (Exception e) {
            // handle exception
        }
    }
}
```

Terraform:

```hcl
terraform {
  required_providers {
    airbyte = {
      source  = "airbytehq/airbyte"
      version = "0.1.0"
    }
  }
}

provider "airbyte" {
  bearer_auth = "12345"
}

resource "airbyte_connection" "stripe_bigquery" {
  name           = "Stripe to BigQuery"
  source_id      = "0c31738c-0b2d-4887-b506-e2cd1c39cc35"
  destination_id = "af0c3c67-aa61-419f-8922-95b0bf840e86"
  schedule = {
    schedule_type = "manual"
  }
}
```

Go:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.airbyte.com/v1/connections"
	payload := strings.NewReader(`{"schedule":{"scheduleType":"manual"},"dataResidency":"auto","namespaceDefinition":"destination","namespaceFormat":"${SOURCE_NAMESPACE}","nonBreakingSchemaUpdatesBehavior":"ignore","name":"Stripe to BigQuery","sourceId":"0c31738c-0b2d-4887-b506-e2cd1c39cc35","destinationId":"af0c3c67-aa61-419f-8922-95b0bf840e86"}`)

	req, err := http.NewRequest("POST", url, payload)
	if err != nil {
		log.Fatal(err)
	}
	req.Header.Add("accept", "application/json")
	req.Header.Add("content-type", "application/json")
	req.Header.Add("user-agent", "string")
	req.Header.Add("authorization", "Bearer 12345")

	res, err := http.DefaultClient.Do(req)
	if err != nil {
		log.Fatal(err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(res.Status)
	fmt.Println(string(body))
}
```

Node.js:

```javascript
const sdk = require('api')('@airbyte-api/v1#a922liamul7v');

sdk.auth('12345');
sdk.createConnection({
  schedule: { scheduleType: 'manual' },
  dataResidency: 'auto',
  namespaceDefinition: 'destination',
  namespaceFormat: '${SOURCE_NAMESPACE}',
  nonBreakingSchemaUpdatesBehavior: 'ignore',
  name: 'Stripe to BigQuery',
  sourceId: '0c31738c-0b2d-4887-b506-e2cd1c39cc35',
  destinationId: 'af0c3c67-aa61-419f-8922-95b0bf840e86'
})
  .then(({ data }) => console.log(data))
  .catch(err => console.error(err));
```

Ruby:

```ruby
require 'uri'
require 'net/http'
require 'openssl'

url = URI("https://api.airbyte.com/v1/connections")

http = Net::HTTP.new(url.host, url.port)
http.use_ssl = true

request = Net::HTTP::Post.new(url)
request["accept"] = 'application/json'
request["content-type"] = 'application/json'
request["user-agent"] = 'string'
request["authorization"] = 'Bearer 12345'
request.body = "{\"schedule\":{\"scheduleType\":\"manual\"},\"dataResidency\":\"auto\",\"namespaceDefinition\":\"destination\",\"namespaceFormat\":\"${SOURCE_NAMESPACE}\",\"nonBreakingSchemaUpdatesBehavior\":\"ignore\",\"name\":\"Stripe to BigQuery\",\"sourceId\":\"0c31738c-0b2d-4887-b506-e2cd1c39cc35\",\"destinationId\":\"af0c3c67-aa61-419f-8922-95b0bf840e86\"}"

response = http.request(request)
puts response.read_body
```

cURL:

```shell
curl --request POST \
  --url https://api.airbyte.com/v1/connections \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --header 'user-agent: string' \
  --header 'authorization: Bearer 12345' \
  --data '
{
  "schedule": {
    "scheduleType": "manual"
  },
  "dataResidency": "auto",
  "namespaceDefinition": "destination",
  "namespaceFormat": "${SOURCE_NAMESPACE}",
  "nonBreakingSchemaUpdatesBehavior": "ignore",
  "name": "Stripe to BigQuery",
  "sourceId": "0c31738c-0b2d-4887-b506-e2cd1c39cc35",
  "destinationId": "af0c3c67-aa61-419f-8922-95b0bf840e86"
}
'
```

Example response:

```json
{
  "connectionId": "9924bcd0-99be-453d-ba47-c2c9766f7da5"
}
```

6. Sync Data

- You now have a Connection set up between your Source and Destination.

- You can now trigger sync jobs for the Connection manually. See: Trigger or Reset Job

- Trigger syncs from your product or from external orchestration tools (ex: Airflow, Dagster).

- The API response contains the `jobId` of your newly created job. You are now syncing data!

- Note: Instead of triggering each sync manually, you can also configure your Connection to sync at a regular frequency.

- Note: Additional details on managing syncs in Airbyte Cloud can be found in Manage syncs.
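Triggering a sync returns a job that is still running (the example response shows `"status": "running"`), so a product or an orchestrator usually polls until the job finishes. The sketch below assumes a Get Job endpoint at `GET /v1/jobs/{jobId}` whose response carries a `status` field, and assumes `succeeded`, `failed`, and `cancelled` are the terminal statuses; confirm both against the Jobs API reference. The HTTP call is injected as `get_status`, and `sleep` is injectable too, so the polling logic can run without network access or real waiting.

```python
import time
from typing import Callable

# Assumed terminal job states; check the Jobs API reference.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_job(
    get_status: Callable[[int], str],
    job_id: int,
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a job until it reaches a terminal status or the timeout expires."""
    waited = 0.0
    while True:
        status = get_status(job_id)
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"job {job_id} still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Simulated responses for job 1234 (the jobId from this step's example).
statuses = iter(["pending", "running", "running", "succeeded"])
result = wait_for_job(lambda _job_id: next(statuses), 1234, sleep=lambda _s: None)
print(result)  # succeeded
```

In a real product, `get_status` would send the GET request with the same bearer-token headers used throughout this guide and return the parsed `status` value.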

Python (requests):

```python
import requests

url = "https://api.airbyte.com/v1/jobs"

payload = {
    "jobType": "sync",
    "connectionId": "9924bcd0-99be-453d-ba47-c2c9766f7da5",
}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "user-agent": "string",
    "authorization": "Bearer 12345",
}

response = requests.post(url, json=payload, headers=headers)
```

Python SDK:

```python
import airbyte
from airbyte.models import shared

s = airbyte.Airbyte(
    security=shared.Security(
        bearer_auth="12345",
    ),
)

req = shared.JobCreateRequest(
    connection_id='9924bcd0-99be-453d-ba47-c2c9766f7da5',
    job_type=shared.JobTypeEnum.SYNC,
)

res = s.jobs.create_job(req)

if res.job_response is not None:
    # handle response
    print(res.job_response)
```

Java (OkHttp):

```java
// Requires okhttp3 (MediaType, OkHttpClient, Request, RequestBody, Response);
// run inside a method that declares `throws IOException`.
OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("application/json");
RequestBody body = RequestBody.create(mediaType, "{\"jobType\":\"sync\",\"connectionId\":\"9924bcd0-99be-453d-ba47-c2c9766f7da5\"}");

Request request = new Request.Builder()
    .url("https://api.airbyte.com/v1/jobs")
    .post(body)
    .addHeader("accept", "application/json")
    .addHeader("content-type", "application/json")
    .addHeader("user-agent", "string")
    .addHeader("authorization", "Bearer 12345")
    .build();

Response response = client.newCall(request).execute();
```

Java SDK:

```java
import com.airbyte.api.Airbyte;
import com.airbyte.api.models.operations.CreateJobResponse;
import com.airbyte.api.models.shared.JobCreateRequest;
import com.airbyte.api.models.shared.JobTypeEnum;
import com.airbyte.api.models.shared.Security;

public class Application {
    public static void main(String[] args) {
        try {
            Airbyte sdk = Airbyte.builder()
                .setSecurity(new Security("") {{
                    bearerAuth = "12345";
                }})
                .build();

            JobCreateRequest req = new JobCreateRequest("9924bcd0-99be-453d-ba47-c2c9766f7da5", JobTypeEnum.SYNC);

            CreateJobResponse res = sdk.jobs.createJob(req);

            if (res.jobResponse != null) {
                // handle response
            }
        } catch (Exception e) {
            // handle exception
        }
    }
}
```

Go:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.airbyte.com/v1/jobs"
	payload := strings.NewReader(`{"jobType":"sync","connectionId":"9924bcd0-99be-453d-ba47-c2c9766f7da5"}`)

	req, err := http.NewRequest("POST", url, payload)
	if err != nil {
		log.Fatal(err)
	}
	req.Header.Add("accept", "application/json")
	req.Header.Add("content-type", "application/json")
	req.Header.Add("user-agent", "string")
	req.Header.Add("authorization", "Bearer 12345")

	res, err := http.DefaultClient.Do(req)
	if err != nil {
		log.Fatal(err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(res.Status)
	fmt.Println(string(body))
}
```

Node.js:

```javascript
const sdk = require('api')('@airbyte-api/v1#dahs1vlfu9tyt2');

sdk.auth('12345');
sdk.createJob({ jobType: 'sync', connectionId: '9924bcd0-99be-453d-ba47-c2c9766f7da5' })
  .then(({ data }) => console.log(data))
  .catch(err => console.error(err));
```

Ruby:

```ruby
require 'uri'
require 'net/http'
require 'openssl'

url = URI("https://api.airbyte.com/v1/jobs")

http = Net::HTTP.new(url.host, url.port)
http.use_ssl = true

request = Net::HTTP::Post.new(url)
request["accept"] = 'application/json'
request["content-type"] = 'application/json'
request["user-agent"] = 'string'
request["authorization"] = 'Bearer 12345'
request.body = "{\"jobType\":\"sync\",\"connectionId\":\"9924bcd0-99be-453d-ba47-c2c9766f7da5\"}"

response = http.request(request)
puts response.read_body
```

cURL:

```shell
curl --request POST \
  --url https://api.airbyte.com/v1/jobs \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --header 'user-agent: string' \
  --header 'authorization: Bearer 12345' \
  --data '
{
  "jobType": "sync",
  "connectionId": "9924bcd0-99be-453d-ba47-c2c9766f7da5"
}
'
```

Example response:

```json
{
  "jobId": 1234,
  "status": "running",
  "jobType": "sync"
}
```

7. Success

- You are now syncing data through the Connection between your Source and Destination! Your end users' data is syncing to the Destination of your choice.

- Once the data is in the Destination, what you do with it is entirely in your control: for example, running transformations with dbt, or any other workflow that suits you.

- Note: To keep things organized and easier to manage, each business that uses your product should have its sources, destinations, and connections in its own workspace (step 2).

- Note: For each new end-user data source that needs to be synced (e.g., Customer X's new HubSpot account), repeat the Airbyte API requests described in steps 3 to 6.
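The per-source workflow from steps 3 to 6 can be tied together in one function that runs each time an end user connects a new data source. This is a hypothetical orchestration sketch: `AirbyteCalls` bundles callables you would implement with the requests shown in each step, and the fake implementation used here only records the order of calls. Step 4 is modeled as a lookup, matching the single central destination in the use case; if each source needs its own destination, that callable would send Create Destination instead.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class AirbyteCalls:
    """Hypothetical wrappers around the API requests from steps 3 to 6."""

    create_source: Callable[[str, Dict[str, Any]], str]  # -> sourceId
    get_destination: Callable[[str], str]                # -> destinationId
    create_connection: Callable[[str, str], str]         # -> connectionId
    trigger_sync: Callable[[str], int]                   # -> jobId


def onboard_source(api: AirbyteCalls, workspace_id: str, source_body: Dict[str, Any]) -> int:
    """Run steps 3-6 for one new end-user data source; return the sync jobId."""
    source_id = api.create_source(workspace_id, source_body)           # step 3
    destination_id = api.get_destination(workspace_id)                 # step 4
    connection_id = api.create_connection(source_id, destination_id)   # step 5
    return api.trigger_sync(connection_id)                             # step 6


log: List[str] = []


def recorder(name: str, result: Any) -> Callable[..., Any]:
    """Fake API call that logs its name and returns a canned result."""
    def call(*_args: Any) -> Any:
        log.append(name)
        return result
    return call


fake = AirbyteCalls(
    create_source=recorder("source", "src-1"),
    get_destination=recorder("destination", "dst-1"),
    create_connection=recorder("connection", "conn-1"),
    trigger_sync=recorder("sync", 1234),
)
job_id = onboard_source(fake, "ws-customer-x", {"name": "Customer X HubSpot"})
print(job_id, log)  # 1234 ['source', 'destination', 'connection', 'sync']
```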

You have now gone from 0 to 1 with Airbyte Embedded 🚀

You may have specific questions about this workflow, advanced workflows, or edge cases you hope to support. Reach out to our support team; we would love to hear your feedback and answer your questions!
